Generative AI and the Diminishing Returns of Scale: Are We Nearing the Plateau?

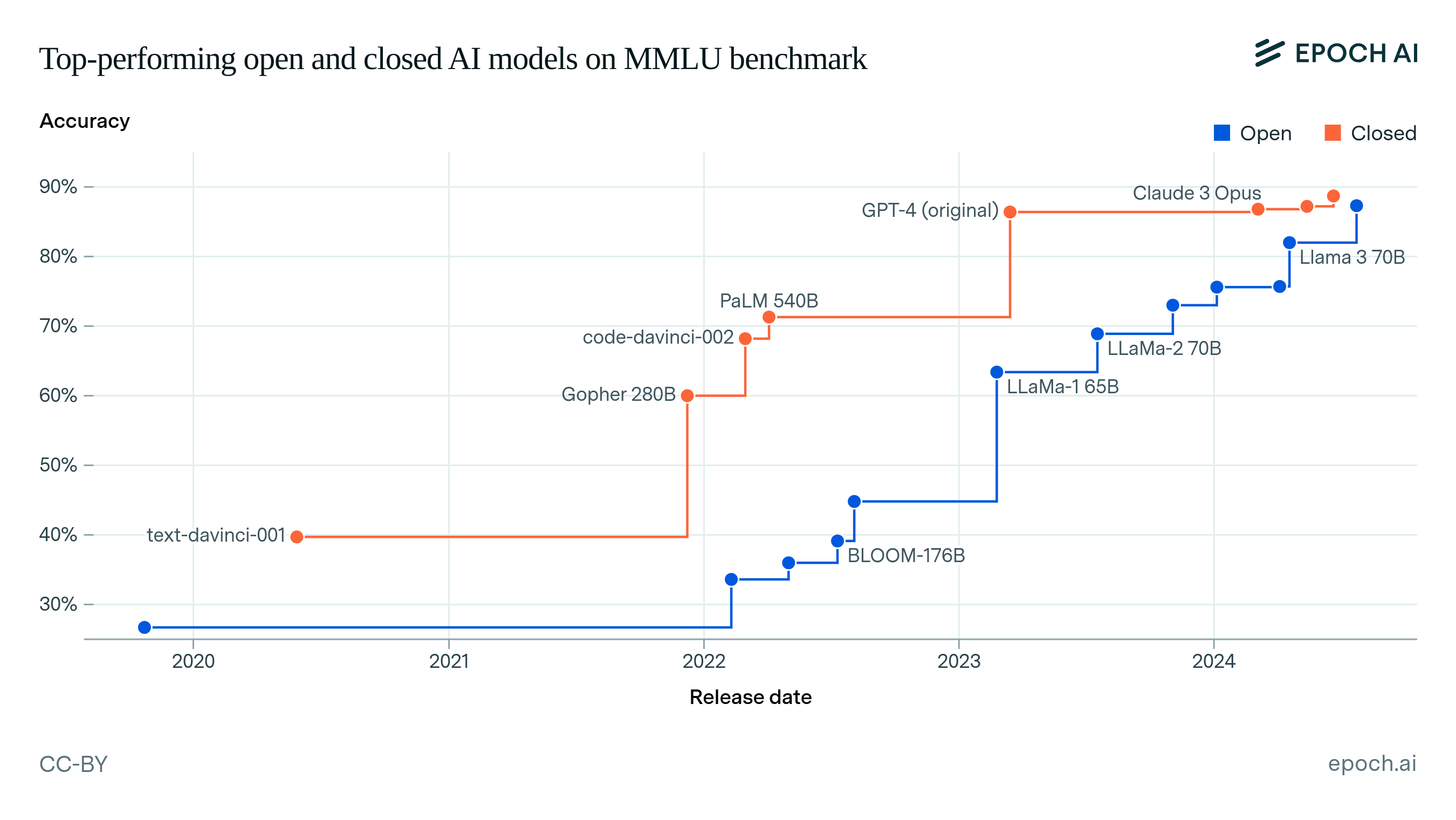

Generative AI has surged in capability by training massive models on colossal datasets: think OpenAI’s GPT series for text, DALL-E for images, and GitHub Copilot for code. However, new evidence suggests that these size-driven improvements may be approaching a plateau, with each increase in model size yielding smaller gains. Simply scaling up further now brings diminishing returns, which is prompting a search for strategies beyond brute force. Techniques like retrieval-augmented generation and more efficient architectures are taking center stage, while multimodal and collaborative approaches promise novel breakthroughs. Beyond the technical constraints, the sustainability and ethics of giant AI models are in sharp focus: high energy use, concentrated model ownership, and alignment challenges underscore the need for more responsible innovation. All told, generative AI continues to expand its reach, but realising its potential without relying solely on unsustainable scaling has become the key puzzle for researchers, policymakers, and developers.